User-defined Gestures for AR

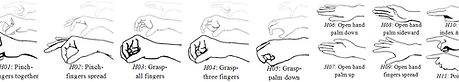

We conducted a guessibility study to obtain user-defined gestures for AR. Previous research has primarily focused on gestures proposed by the researchers themselves, while little is known about user preferences and behavior when interacting in AR.

We present the results of our study, in which 800 gestures were elicited from 20 participants for 40 selected tasks. Based on the agreement among the elicited gestures, we created a user-defined gesture set.
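The agreement step can be illustrated with the agreement score of Wobbrock et al., which is widely used in guessibility studies: for a task r whose proposals P_r are grouped into sets P_i of identical gestures, A_r = Σ (|P_i| / |P_r|)². The sketch below assumes that formula and a simple "most frequent gesture wins" rule for building the set. The function names, task names, and gesture labels are hypothetical, and this is not the study's actual analysis code; for example, it does not resolve conflicts where the same gesture wins several tasks.

```python
from collections import Counter


def agreement_score(proposals):
    """Agreement score for one task (referent), assuming Wobbrock et al.'s formula.

    proposals: one gesture label per participant; identical labels mean the
    gestures were judged to be the same gesture.
    Returns sum over groups of identical proposals of (group size / total)^2.
    """
    if not proposals:
        raise ValueError("need at least one proposal")
    total = len(proposals)
    return sum((count / total) ** 2 for count in Counter(proposals).values())


def consensus_set(elicited):
    """Map each task to (most frequent gesture, agreement score).

    Hypothetical selection rule: ties go to the gesture encountered first.
    Conflicts between tasks are not resolved here.
    """
    result = {}
    for task, proposals in elicited.items():
        gesture, _ = Counter(proposals).most_common(1)[0]
        result[task] = (gesture, agreement_score(proposals))
    return result


if __name__ == "__main__":
    # Hypothetical proposals from 20 participants for two made-up tasks.
    elicited = {
        "move": ["grab-and-drag"] * 14 + ["point-and-drag"] * 4 + ["swipe"] * 2,
        "delete": ["crumple"] * 10 + ["swipe-away"] * 10,
    }
    for task, (gesture, score) in consensus_set(elicited).items():
        print(task, gesture, round(score, 2))
    # prints: move grab-and-drag 0.54
    #         delete crumple 0.5
```

A score of 1.0 means every participant proposed the same gesture, while an even split across many gestures drives the score toward 1/|P_r|, so low-agreement tasks are candidates for closer inspection rather than automatic inclusion.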

In terms of research contributions, we extended Wobbrock's surface gesture taxonomy to cover the dimensionalities of AR and used it to characterize the collected gestures. We also share common motifs observed in this study to provide a better understanding of users' thinking and behavior.

We hope that our gesture set will help designers create better and more consistent user-centered gestures for AR.

T. Piumsomboon, A. Clark, M. Billinghurst and A. Cockburn. User-Defined Gestures for Augmented Reality. In Human-Computer Interaction – INTERACT'13, P. Kotzé, G. Marsden, G. Lindgaard, J. Wesson and M. Winckler, Eds. Springer Berlin Heidelberg, pp. 282-299, 2013.

T. Piumsomboon, A. Clark, M. Billinghurst and A. Cockburn. User-defined gestures for augmented reality. In Proceedings of the CHI '13 Extended Abstracts on Human Factors in Computing Systems (CHI'13), pp. 955-960, 2013.